We are modeling the development of social communication on our robotic platforms, namely an upper-torso child-like humanoid, Infanoid, and a simple creature-like robot, Keepon. We implement in these robots the functions of eye-contact and joint attention, which play an important role in coordinating mutual attention and action.

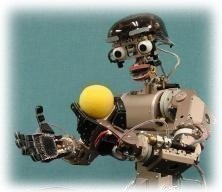

Infanoid is our major research platform. It is an upper-torso humanoid robot which is as big as a 3- to 4-year-old human child. Currently, 29 actuators (mostly DC motors with encoders and current-sensing devices) and a number of sensors are arranged in this relatively small body. It has two hands, each of which has four fingers and a thumb that are capable of pointing, grasping, and a variety of other hand gestures.

The head of Infanoid has two eyes, each of which contains two different color CCD cameras for peripheral and foveal view; the eyes can perform saccadic eye movement and smooth pursuit of a visual target. The video images taken by the cameras are fed into a cluster of PCs for real-time detection of human faces (by skin-color filtering and template matching) and of physical objects such as toys (by color/motion segmentation). The distance to the faces and objects can also be computed from the disparity between the left and right images.

Infanoid has lips and eyebrows to produce various expressions, like those exemplified in the pictures below. The lips also move in accordance with the sound produced by a speech synthesizer. By changing the inclination of, and the gap between, the lips, it expresses a variety of emotional states.

- More on Specification of Infanoid

- More on History of Infanoid

From the microphones at the positions of the two ears, Infanoid hears human voice and analyzes the sound into a sequence of phonemes; the robot does not have any a priori knowledge of language (like lexicon or grammar). It also recognizes the change in the fundamental frequency to extract emotional contour from human speech. By feeding the output of the voice analyzer into a speech synthesizer, Infanoid performs vocal imitation while sharing attention with the interactant, which we consider to be a precursor to the primordial phase of language acquisition.

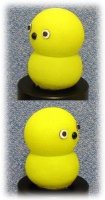

The creature-like robot, Keepon (pronounced, "key-pong") is designed to perform emotional and attention exchange with human interactants (especially, children) in the simplest and most comprehensive way.

Keepon has a yellow snowman-like body. The upper part (the "head") has two eyes, each of which is a color CCD camera with a wide-angle lens (120 degrees horizontally), and a nose, which is actually a microphone. The lower part (the "belly") has a small gimbal and four wires, by which the body is manipulated like a marionette; four motors and two circuit boards (a PID controller and a motor driver) are installed in the black cylinder. Since the body is made of silicone rubber and its inside is relatively hollow, Keepon's head and belly deform whenever it changes posture and when someone touches it.

- More on Specification of Keepon

- More on History of Keepon

This simple body has four degrees of freedom: nodding (tilt) within +/-40 degrees, shaking (pan) within +/-180 degrees, rocking (side) within +/-25 degrees, and bobbing (shrink) with a 15-mm stroke.

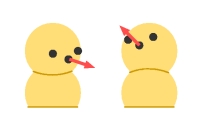

These four degrees of freedom produce two qualitatively different types of action:

- Attentive action

Directing the head up/down and left/right so as to orient Keepon's face/body to a certain target in the environment. Keepon seems to be perceiving the target. This action includes eye-contact and joint attention.

- Emotive action

Keeping its attention in a certain direction, Keepon rocks its body from side to side and/or bobs its body up and down. Keepon seems to express emotions (like pleasure and excitement) about the target.

Since Keepon is only capable of these two kinds of action, human interactants (even children) will easily understand what Keepon perceives and how Keepon evaluates it.

Looking into children's social development, we believe that a robot that is capable of eye-contact and joint attention deserves to be a socially interactive partner, which leads us to believe in the existence of a "mind". In other words, an agent we relate to in terms of attention and emotion can be considered as a social interactant to which we attribute a "mind". This is our basic motives for building Infanoid and Keepon, which are capable of eye-contact and joint attention.

The eye-contact capability is implemented in Infanoid and Keepon as follows. First, from the real-time image streams (30 frames/sec) taken by the cameras, the robots search for a human face looking straight using a skin-color filter and average-face templates. If a face is detected, the robots drive the motors to direct the gaze/face/body toward the detected face. Infanoid, for example, gives an appropriate amount of eye-convergence according to the distance to the face computed from the image disparity. Then the gaze of the robot and that of the human interactant are facing straight at each other, establishing eye-contact between the human and the robot.

The joint attention capability is implemented in Infanoid and Keepon as follows. The robots first generates several hypotheses about the direction of the face being tracked. From the images taken from the cameras (including the foveal ones in the case of Infanoid), the robots compute the likelihood for each of the hypotheses and select the most likely direction of the face. Then, the robots start searching in that direction and identify the target of the human attention. Currently, the target object is segmented out from the background by a predetermined color (e.g. toys with saturated colors) and motion. Finally, the robots drive the motors to direct the gaze/face/body toward the target object, thus establishing joint attention with the human interactant.